OpenAI has just announced the release of GPT-4 to ChatGPT Plus subscribers, along with a waitlist for developers who want to try its API as soon as possible.

The GPT-4 developer livestream will also be available on YouTube!

In this article, we explore what GPT-4 is and what it is capable of. Let's dive in.

What is GPT-4, and how does it differ from GPT-3.5?

OpenAI's GPT-4 release is an eagerly anticipated development in the field of artificial intelligence. As the successor to the groundbreaking GPT-3, GPT-4 promises to push the boundaries of what is possible with natural language processing (NLP) and machine learning.

To understand the potential impact of GPT-4, it helps to first understand the capabilities of its predecessor, GPT-3. Released in 2020, GPT-3 was widely regarded at the time as the most advanced natural language processing model available. With 175 billion parameters, it can generate human-like responses to text prompts, answer questions, and even write coherent, engaging stories. Its performance led many to hail it as a major milestone in the development of artificial intelligence.

What to expect from GPT-4?

First and foremost, we can expect an increase in the number of parameters. OpenAI has not confirmed the exact number, but it is widely expected to be significantly higher than GPT-3's 175 billion. A larger model could allow GPT-4 to understand natural language more precisely and produce even more human-like responses.

Another potential improvement is the ability to perform more complex tasks. GPT-3 can handle a wide range of NLP tasks, but its capabilities are still limited; for example, it struggles with tasks that require a deeper understanding of context.

A related area is complex reasoning. GPT-3 can perform basic logical reasoning, but it struggles with more complex forms of reasoning. GPT-4 may be able to overcome some of these limitations, opening up new possibilities for NLP applications.

GPT-4 may also do better on tasks that require knowledge outside of the text prompt. For example, GPT-3 struggles with tasks that require knowledge of the physical world, such as understanding how a car works. GPT-4 may be able to close some of this gap by incorporating knowledge from external sources, such as images or videos.

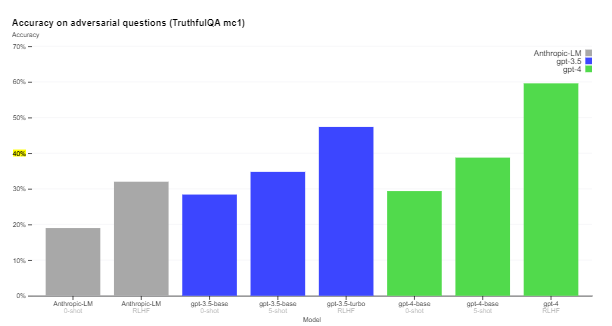

One of the most exciting potential improvements is in conversational AI. GPT-3 can generate human-like responses to text prompts, but it struggles to maintain a coherent conversation. GPT-4 may overcome some of these limitations through a better understanding of context and dialogue flow, leading to more human-like conversations. It is also more factually reliable: according to OpenAI, GPT-4 scores 40% higher than GPT-3.5 on the company's internal adversarial factuality evaluations.

Beyond these improvements, it is also possible that GPT-4 will be more energy-efficient than its predecessor. GPT-3 requires a significant amount of computing power to run, which has raised concerns about the environmental impact of AI development. If GPT-4 achieves better performance while using less energy, it could help reduce the carbon footprint of AI development.

So, what are the key differences between GPT-4 and GPT-3.5? As the name suggests, GPT-3.5 is an incremental improvement on GPT-3 rather than a major new release. It is thought to have a similar number of parameters to GPT-3, with improvements in its ability to perform certain NLP tasks. GPT-4, by contrast, is a new major release.

In addition, GPT-4 is multimodal: it accepts images as well as text as input, although it responds only in text. Extending this to other forms of data, such as video and audio, would make it an even more versatile tool for fields including computer vision, speech recognition, and natural language processing.

Performance Improvements

GPT-4 shows substantial performance improvements over GPT-3.5 across a wide range of benchmarks and tasks. Most notably, it passed a simulated bar exam with a score around the top 10% of test-takers, while GPT-3.5 scored around the bottom 10%. GPT-4 also demonstrates better factuality, steerability, and adherence to guardrails.

On the MMLU benchmark, which consists of multiple-choice questions in 57 subjects (both professional and academic), GPT-4 scored 86.4% accuracy in a 5-shot evaluation, compared to GPT-3.5's 70.0%. Similarly, on the HellaSwag benchmark, which tests commonsense reasoning about everyday events, GPT-4 scored 95.3% in a 10-shot evaluation, while GPT-3.5 managed 85.5%.

On the AI2 Reasoning Challenge (ARC), a benchmark of grade-school multiple-choice science questions, GPT-4 reached 96.3% accuracy in a 25-shot evaluation, compared to GPT-3.5's 85.2%. On the HumanEval benchmark of Python coding tasks, GPT-4 scored 67.0% in a 0-shot evaluation, while GPT-3.5 lagged at 48.1%.
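The "5-shot", "10-shot", "25-shot" and "0-shot" labels in these results refer to how many solved example questions are placed in the prompt before the question being scored; accuracy is then the share of questions where the model's chosen answer matches the answer key. The sketch below illustrates that idea for multiple-choice questions. It is a minimal illustration under assumed conventions: the plain-text prompt layout and the `MCQuestion`, `build_k_shot_prompt` and `accuracy` names are hypothetical and do not reproduce OpenAI's evaluation harness or any benchmark's official template.

```python
from dataclasses import dataclass

# Hypothetical prompt layout for illustration only; real benchmarks
# (MMLU, HellaSwag, ARC) each define their own templates.
LETTERS = "ABCD"


@dataclass
class MCQuestion:
    question: str
    choices: list[str]
    answer: str  # letter of the correct choice, e.g. "B"


def format_question(q: MCQuestion, include_answer: bool) -> str:
    """Render one question with lettered choices, optionally followed by its answer."""
    lines = [q.question]
    for letter, choice in zip(LETTERS, q.choices):
        lines.append(f"{letter}. {choice}")
    lines.append(f"Answer: {q.answer}" if include_answer else "Answer:")
    return "\n".join(lines)


def build_k_shot_prompt(examples: list[MCQuestion], target: MCQuestion, k: int) -> str:
    """Prepend k solved examples to the unanswered target question (k=0 gives a 0-shot prompt)."""
    shots = [format_question(ex, include_answer=True) for ex in examples[:k]]
    return "\n\n".join(shots + [format_question(target, include_answer=False)])


def accuracy(predictions: list[str], gold: list[str]) -> float:
    """Percentage of predicted letters that match the answer key."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("predictions and gold must be non-empty and the same length")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return 100.0 * correct / len(gold)


if __name__ == "__main__":
    solved = [
        MCQuestion("What is 2 + 2?", ["3", "4", "5", "6"], "B"),
        MCQuestion("Which is a planet?", ["Mars", "Moon", "Sun", "Comet"], "A"),
    ]
    target = MCQuestion("What is 1 + 1?", ["1", "2", "3", "4"], "B")
    print(build_k_shot_prompt(solved, target, k=2))
    print(accuracy(["B", "A", "C"], ["B", "A", "D"]))
```

Keeping prompt construction separate from scoring mirrors how such evaluations are usually described: the same questions can be scored at different shot counts simply by changing `k`.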

OpenAI incorporated more human feedback, including feedback submitted by ChatGPT users, to improve GPT-4's behavior. The company also worked with more than 50 experts in domains including AI safety and security to get early feedback, and applied lessons from real-world use of its previous models to GPT-4's safety research and monitoring systems.

Conclusion

The release of GPT-4 marks a significant leap forward in the field of artificial intelligence. The model outperforms its predecessor, GPT-3.5, on numerous benchmarks and tasks, with improved factuality, steerability, and guardrail adherence. Its ability to accept visual inputs and the greater control it offers over style and tone further highlight its potential. It has been an incredible run for OpenAI, and the work it has put in is clearly paying off.
