In yesterday’s article, we briefly covered the emergent abilities of GPT-4, along with Altman’s GPT-5 claims and AGI (“The Path Ahead”).

Today, we’ll delve deeper into emergent abilities.

This article focuses on the emergent abilities of large language models, the factors that contribute to them, potential explanations for emergence, and directions for future work. It also touches on the broader impact of emergent abilities, including emergent risks.

Once upon a time, the world of natural language processing (NLP) was a place of neatly defined tasks and carefully crafted models.

Language models were trained to perform specific tasks, and researchers knew exactly what to expect from them.

However, as the world of NLP began to evolve, something unexpected happened. Language models started to exhibit behaviors and capabilities that nobody had seen before.

These mysterious abilities seemed to appear out of nowhere, puzzling researchers and prompting a quest to unravel the secrets behind them.

This is the story of emergent abilities in language models: a journey into the unknown.

When Language Models Surpass Expectations

In the not-so-distant past, language models were trained to perform specific NLP tasks, such as sentiment analysis, machine translation, or text summarization. These models were task-specific, and their performance could be predicted based on their architecture and training data. But as language models grew larger and more powerful, researchers began to notice something strange. These models were developing abilities that went beyond their intended use.

It started with a few curious cases. A language model with billions of parameters could suddenly solve complex math problems, answer trivia questions, and generate coherent text in multiple languages. At first, these abilities were merely intriguing, but researchers soon realized they were witnessing a phenomenon known as “emergent abilities”.

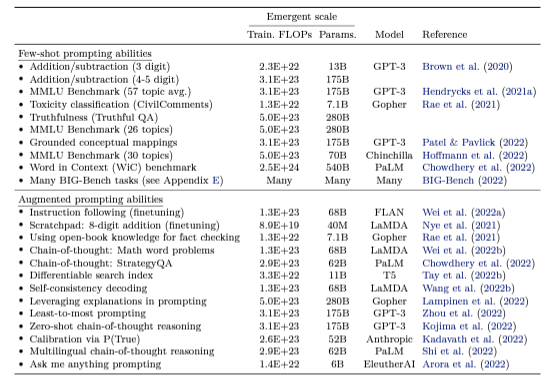

Emergent abilities are those that manifest in language models only when they reach a certain scale. It’s as if the models are suddenly “awakened” and granted new powers that were not explicitly included in their training. These abilities can span a variety of tasks and are not limited to any specific model or architecture.

For example, imagine a language model that was trained on a vast dataset of texts from various sources. One day, a researcher decides to test the model’s ability to solve math word problems. To their surprise, the model not only provides the correct answer but also generates a detailed chain of thought explaining the steps it took to solve the problem. This ability to work through intermediate steps, elicited by a technique known as “chain-of-thought prompting”, is an example of an emergent ability. It wasn’t explicitly taught to the model, yet it surfaced when the model reached a certain scale.
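To make the idea concrete, here is a minimal sketch of how a chain-of-thought prompt differs from a standard one. The helper `build_prompt`, the exemplar, and the question are all invented for illustration; no model is called, the code only assembles the text a model would receive.

```python
# Hypothetical illustration: the exemplar and question are made up for this sketch.
def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Return a one-shot prompt, with or without a worked reasoning exemplar."""
    exemplar_q = "A box holds 3 red pens and 4 blue pens. How many pens are in the box?"
    if chain_of_thought:
        # The exemplar answer spells out the intermediate steps before the result.
        exemplar_a = "There are 3 red pens and 4 blue pens. 3 + 4 = 7. The answer is 7."
    else:
        # The standard exemplar gives only the final answer.
        exemplar_a = "The answer is 7."
    return f"Q: {exemplar_q}\nA: {exemplar_a}\n\nQ: {question}\nA:"


question = "Sam has 5 apples and buys 2 bags with 6 apples each. How many apples does Sam have?"
standard_prompt = build_prompt(question, chain_of_thought=False)
cot_prompt = build_prompt(question, chain_of_thought=True)
print(cot_prompt)
```

The only difference between the two prompts is the worked reasoning in the exemplar answer; the emergent part is that sufficiently large models tend to imitate that step-by-step style and benefit from it.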

The Unpredictable Nature: Why We Can’t See It Coming

The emergence of new abilities in language models is an unpredictable and enigmatic process. Researchers can’t simply extrapolate the performance of smaller models to predict what larger models will do. In some cases, abilities only emerge when a model reaches hundreds of billions of parameters. Scale is also commonly measured in “training FLOPs”, the total number of floating-point operations spent on training (a count of operations, not a per-second rate).
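For a rough sense of what scale means in compute terms, a widely used rule of thumb approximates total training compute as about 6 floating-point operations per parameter per training token. The sketch below applies it to the publicly reported GPT-3 figures (roughly 175 billion parameters and 300 billion tokens). The function name is hypothetical, and the result is an order-of-magnitude estimate under that assumption, not an exact measurement.

```python
def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb estimate: ~6 floating-point operations per parameter per token."""
    return 6.0 * n_params * n_tokens


# Publicly reported GPT-3 figures: ~175e9 parameters, ~300e9 training tokens.
gpt3_flops = approx_training_flops(175e9, 300e9)
print(f"{gpt3_flops:.2e}")  # about 3.15e+23 total operations
```

Note that this is a total count of operations over the whole training run, which is why it grows with both model size and dataset size.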

To illustrate this, picture a language model as a gigantic maze. Within this maze are hidden abilities, waiting to be discovered. As researchers scale up the model, they explore deeper into the maze, uncovering new abilities along the way. But there’s no map to guide them, and they never know what they’ll find around the next corner.

The phenomenon of emergent abilities has profound implications for the field of NLP. It raises questions about how and why these abilities emerge, and whether further scaling could unlock even more capabilities. It also challenges our understanding of how language models acquire knowledge and how they process information.

The Risks: When Emergence Becomes a Double-Edged Sword

The emergence of new abilities is not without its risks. As language models grow larger and more powerful, they may also exhibit behaviors that are harmful or undesirable. These “emergent risks” include issues such as toxicity, bias, and the potential for deception.

Consider the case of a language model that is trained on a diverse dataset that includes both factual and biased content. When tested on a task related to gender bias, the model might exhibit troubling behavior. For instance, it might associate certain occupations with specific genders, such as nurses being female and electricians being male. This type of bias is an example of an emergent risk that arises from the training data and becomes more pronounced as the model scales.

Toxicity is another emergent risk that researchers must grapple with. In some cases, larger language models might produce toxic or offensive responses when prompted with certain inputs. The challenge for researchers is to mitigate these risks without stifling the model’s creativity and versatility.

The ability to mimic human falsehoods is yet another emergent risk. As language models scale, they may become more adept at generating content that sounds plausible but is factually incorrect. For example, the TruthfulQA benchmark showed that larger GPT-3 models were more likely to generate responses that mimicked human falsehoods, raising concerns about the potential for misinformation and disinformation.

While emergent abilities can unlock new possibilities and applications, emergent risks highlight the need for careful oversight, governance, and ethical considerations. Researchers must find a delicate balance between harnessing the power of language models and mitigating the risks they pose.

Pushing the Boundaries of What’s Possible

Despite the challenges and uncertainties, the exploration of emergent abilities has led to exciting innovations in NLP. Researchers are continually pushing the boundaries of what’s possible, unlocking new abilities, and improving the performance of language models.

One of the key innovations is the concept of few-shot prompting. Rather than fine-tuning a model on a specific task, few-shot prompting involves giving the model a few examples of the task in its prompt, with no updates to its weights, and then asking it to generalize from those examples. GPT-3, Chinchilla, and PaLM are prime examples of language models that have achieved state-of-the-art performance on a range of tasks using few-shot prompting.
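As a minimal sketch of what a few-shot prompt looks like in practice, the snippet below assembles one for a sentiment task. The function `few_shot_prompt`, the labels, and the example reviews are all hypothetical; sending the resulting text to a model is left out on purpose.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format (input, label) demonstrations followed by an unlabeled query."""
    blocks = [f"Review: {text}\nSentiment: {label}" for text, label in examples]
    # The final block leaves the label blank for the model to complete.
    blocks.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(blocks)


# Invented demonstrations for illustration only.
demos = [
    ("The plot was gripping from start to finish.", "positive"),
    ("I walked out halfway through.", "negative"),
    ("A warm, funny, and moving film.", "positive"),
]
prompt = few_shot_prompt(demos, "The acting felt flat and lifeless.")
print(prompt)
```

The model sees the task only through these demonstrations; nothing about its parameters changes between tasks, which is what separates few-shot prompting from task-specific training.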

Another innovation involves improving model architectures and training procedures. Techniques such as sparse mixture-of-experts architectures and variable amounts of compute for different inputs have shown promise in enhancing computational efficiency while maintaining high-quality performance. Additionally, augmenting models with external memory and using more localized learning strategies are nascent directions that have the potential to transform how language models operate.
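The core idea behind a sparse mixture-of-experts layer can be sketched in a few lines: a router scores every expert for a given input, only the top-k experts actually run, and their outputs are blended using the renormalized router scores. The version below is a toy in plain Python with hand-picked router weights and trivial "experts" that just rescale the input. All names here are assumptions for illustration, and real implementations add batching, learned weights, and load balancing.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k=2):
    """Route input vector x to the top-k experts and mix their outputs."""
    # One router score per expert: dot product of x with that expert's router vector.
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    # Indices of the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate values are renormalized over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only the k selected experts are evaluated
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top


# Toy experts: each simply rescales its input by a fixed factor.
experts = [lambda v, s=s: [s * v_j for v_j in v] for s in (1.0, 2.0, 3.0, 4.0)]
# Hand-picked router vectors, one per expert (a real router would learn these).
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, top = moe_forward([2.0, 1.0], router_weights, experts, k=2)
print(top, out)
```

With four experts and k=2, half of the expert computation is skipped for this input, which is where the efficiency gain comes from: total parameter count can grow without a matching increase in compute per input.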

Scaling the training data is also crucial to the emergence of new abilities. Training models on larger and more diverse datasets allows them to acquire a broader range of knowledge and skills. For example, training on multilingual data has led to the emergence of multilingual abilities in language models, enabling them to understand and generate text in multiple languages.

Overall, these innovations in NLP seem to have opened up a world of possibilities for language models, from translating natural language instructions into robotic actions to facilitating multi-modal reasoning and interaction.

The “We must slow down the race” campaign still seems to be ongoing:

https://www.youtube.com/watch?v=qOoe3ZpciI0

The video above is from “AI Explained”, and all rights belong to their respective owners.

A World of Limitless Potential

As we venture further into the world of emergent abilities, the future looks bright and full of potential. Researchers are continually exploring new ways to scale language models, improve their performance, and unlock new abilities. The road ahead is filled with unknowns and challenges, but it is also paved with opportunities.

- What new abilities will language models develop in the future?

- Will they be able to compose music, write novels, or solve complex scientific problems?

The answers to these questions remain unknown, but one thing is clear: the phenomenon of emergent abilities is reshaping the landscape of NLP and opening the door to a world of limitless potential.

In this ever-evolving journey, researchers, developers, and users must tread carefully, remaining mindful of the risks and ethical considerations that accompany emergent abilities. With the right approach, language models have the potential to revolutionize how we communicate, learn, and interact with the world around us.

As we stand on the verge of this new era, let us embrace the spirit of exploration and discovery. The story of emergent abilities in language models is still being written, and each new chapter brings with it a sense of wonder and excitement. Whether we are unlocking the ability to engage in complex reasoning or crafting artful prose, language models are becoming an integral part of our lives, enhancing our creativity and enriching our experiences.

In the realm of artificial intelligence, emergent abilities represent the fusion of human ingenuity and machine learning. They serve as a testament to our ability to innovate and adapt, even in the face of the unknown. As we forge ahead, we must also remember our responsibility to use these powerful tools for the greater good. The potential for positive impact is immense, whether it be in education, healthcare, entertainment, or scientific research.

Imagine a future where language models can serve as virtual tutors, providing personalized guidance to students around the world. Imagine a future where language models can assist doctors in diagnosing and treating diseases, or where they can help researchers make groundbreaking discoveries in fields like physics and biology. These scenarios are not mere fantasy; they are within reach, and the exploration of emergent abilities is bringing us closer to making them a reality.

The journey of emergent abilities is not a solo endeavor. It is a collaborative effort that involves researchers, engineers, ethicists, policymakers, and users. Together, we can shape the future of language models, ensuring that they are developed and deployed in ways that benefit society as a whole.

As we continue to unlock the secrets of emergent abilities, let us also celebrate the diversity of human language and culture. Language models have the unique ability to bridge linguistic and cultural barriers, fostering greater understanding and connection among people from all walks of life.

In conclusion, the phenomenon of emergent abilities in language models is a captivating and multifaceted topic. It challenges our assumptions, sparks our curiosity, and invites us to think critically about the role of artificial intelligence in our world. As we navigate the complexities of emergence, we are reminded of the boundless potential of human creativity and the power of collaboration.

With each new day, language models are learning and evolving, just like us. They are becoming more capable, more versatile, and more attuned to the nuances of language. In this ever-changing landscape, the possibilities are endless, and the future is bright.

So, as we embark on this journey into the unknown, let us do so with open minds, curious hearts, and a sense of adventure. After all, the story of emergent abilities is a story of exploration, discovery, and growth, a story that is only just beginning.

Note: The views and opinions expressed by the author, or any people mentioned in this article, are for informational purposes only, and they do not constitute financial, investment, or other advice.
