Last Article’s Recap on GPT-5: OpenAI’s upcoming release of GPT-5, the next major Large Language Model (LLM), is generating both excitement and concern in the AI community. The development faces pushback from a petition urging a pause, but OpenAI’s gradual release approach, including an intermediate GPT-4.5 version, reflects a commitment to safety and responsible innovation.

Science has always been a domain that required deep human intelligence, careful reasoning, and a curious mind. But imagine a world where an Artificial Intelligence (AI) language model, with no physical lab or test tubes, can venture into the realm of scientific research.

That’s precisely what GPT-4, OpenAI’s language model, is now capable of doing. In this article, we’ll explore the incredible emergent abilities of GPT-4 as it dabbles in science, and we’ll also delve into Sam Altman’s recent statement on the future of GPT-5, a much-anticipated successor to GPT-4.

GPT-4’s Newfound Talent

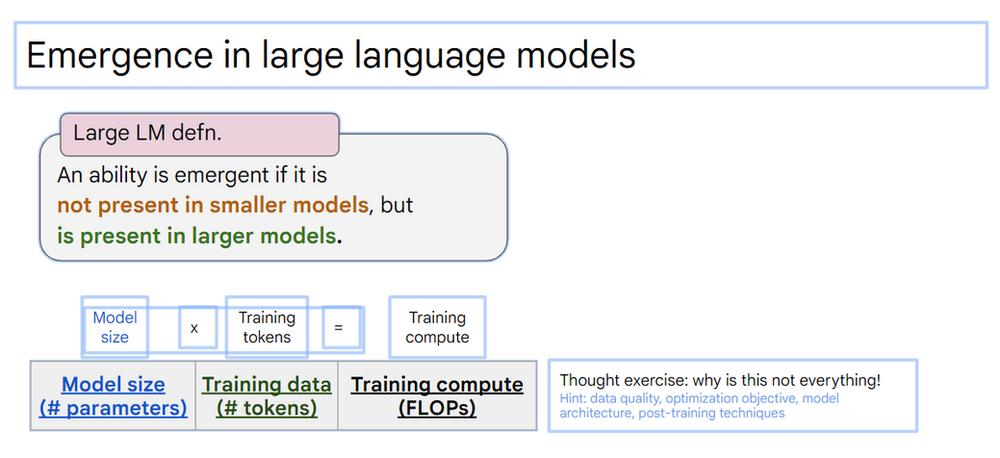

GPT-4, the latest iteration of OpenAI’s language models, has surprised the world with its “emergent abilities.” These are abilities that were not explicitly programmed into the model but surfaced as the model grew in size and computing power.

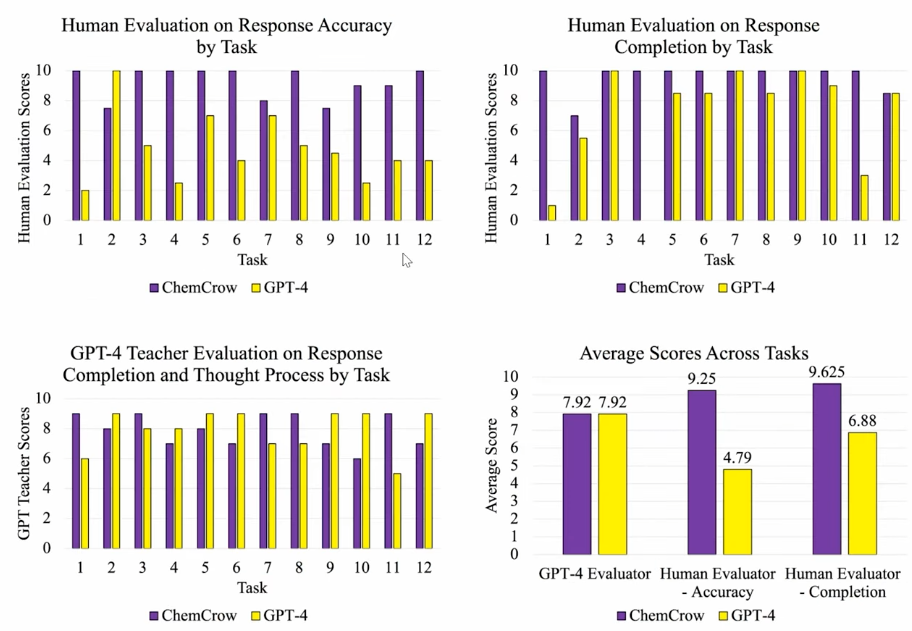

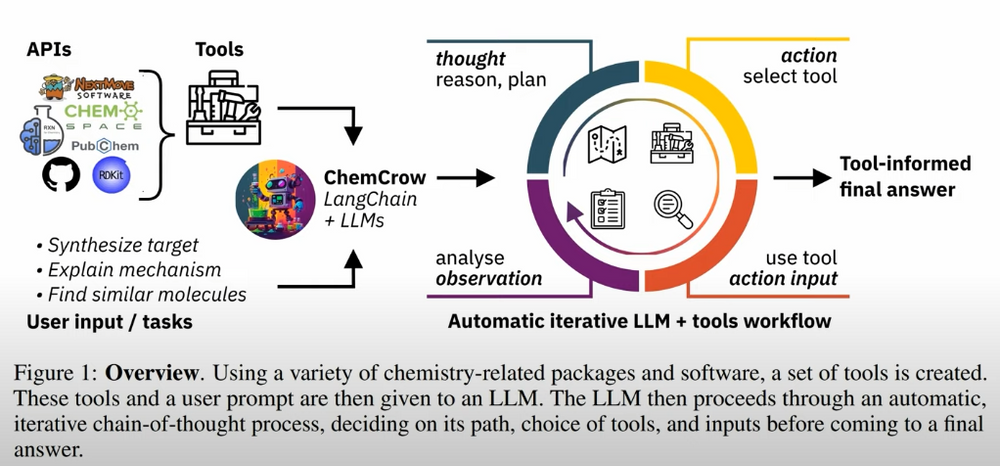

In a recent paper, researchers showcased how GPT-4 could design, plan, and execute scientific experiments entirely autonomously. Whether it was proposing similar non-toxic molecules or reasoning through complex scientific problems, GPT-4 demonstrated a knack for scientific thinking.

This is GPT-4’s performance when it interacts with tools, that is, when it decides which tools to use.

One of the most fascinating aspects is how GPT-4 interacts with real-world tools. For example, it was connected to a wide array of tools, including Slack and Zapier. The language model acts as the “brain,” deciding which tools to use and formulating plans to achieve specific scientific goals.
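To make the “brain plus tools” idea concrete, here is a minimal Python sketch of a tool-dispatch loop. It is not the setup from the paper: the tool names, the `plan` function, and the hard-coded steps are all hypothetical stand-ins, and no real Slack, Zapier, or OpenAI API is called. In a real system, the model itself would produce the plan after being shown the goal and the tool descriptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


def search_literature(query: str) -> str:
    # Hypothetical stand-in for a real search tool.
    return f"[search results for: {query}]"


def send_message(text: str) -> str:
    # Hypothetical stand-in for a messaging integration.
    return f"[message sent: {text}]"


# Registry of tools the "brain" is allowed to pick from (names are illustrative).
TOOLS: Dict[str, Tool] = {
    "search": Tool("search", "Look up background information", search_literature),
    "message": Tool("message", "Notify a human collaborator", send_message),
}


def plan(goal: str) -> List[Tuple[str, str]]:
    """Stand-in for the language model: returns (tool_name, tool_input) steps.

    Assumption: a real system would prompt the model with the goal and the
    tool descriptions and parse its reply; here the plan is hard-coded.
    """
    return [
        ("search", goal),
        ("message", f"Plan drafted for: {goal}"),
    ]


def execute(goal: str) -> List[str]:
    """Run each planned step by dispatching to the named tool."""
    results = []
    for tool_name, tool_input in plan(goal):
        tool = TOOLS.get(tool_name)
        if tool is None:
            # Simple guardrail: skip any step that names an unregistered tool.
            results.append(f"[skipped unknown tool: {tool_name}]")
            continue
        results.append(tool.run(tool_input))
    return results
```

Calling `execute("find non-toxic analogues")` walks the two planned steps in order and returns one result string per step. Restricting the model to an explicit registry is one simple place to attach the kind of guardrails discussed in this article.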

The significance of this development cannot be overstated. It marks a monumental shift in how AI models can contribute to scientific advancements. But as with all great leaps, it comes with some important questions:

- What if GPT-4 proposes the synthesis of chemical weapons?

- Can we trust an AI model with such capabilities?

It’s evident that ethical considerations and guardrails must be implemented to ensure the safe and responsible use of AI in science.

Altman’s GPT-5 Statement

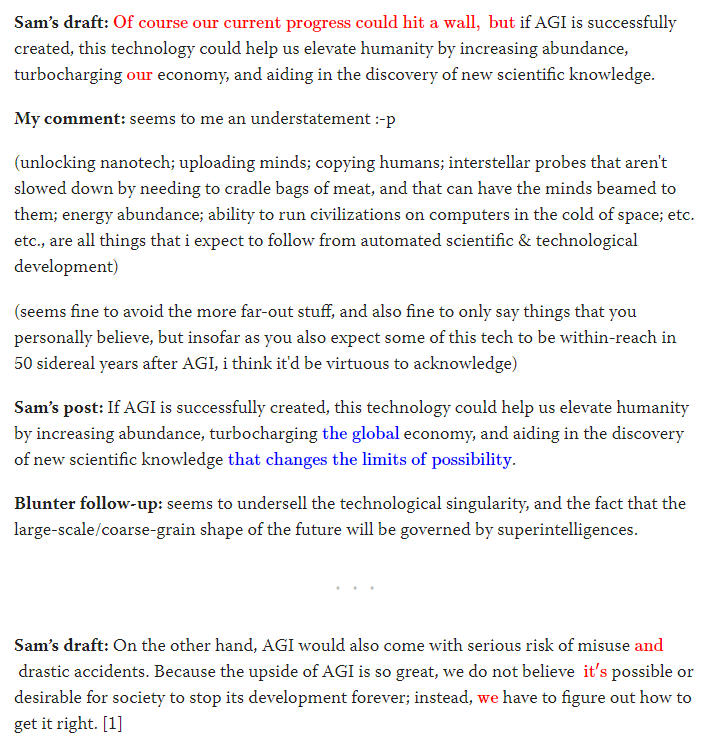

While the world is still marvelling at GPT-4’s abilities, OpenAI’s CEO, Sam Altman, made headlines with his statement regarding GPT-5. In response to a letter calling for a pause in AI experimentation, Altman clarified that OpenAI is not currently training GPT-5. Instead, the organization is focusing on other projects built on top of GPT-4 that carry safety concerns of their own.

Altman’s statement reignited discussions about the trajectory of AI development and the importance of safety in Artificial Intelligence research. It’s crucial to strike the right balance between advancing Artificial Intelligence capabilities and ensuring alignment with human values, a challenge that has yet to be fully addressed.

Altman’s words also raise questions about the future of GPT-5.

- What new emergent abilities might it possess?

- What ethical considerations will arise?

- Will GPT-5 be the language model that finally scales the peaks of science and art?

AGI and the Path Ahead

The advancements in GPT-4’s capabilities and the anticipation surrounding GPT-5 are part of a larger narrative about the path to artificial general intelligence (AGI), an AI that can understand, learn, and perform tasks at or beyond human capability.

Some experts describe AGI as “Godlike Artificial Intelligence,” which raises questions about how we, as a society, will navigate a future where AGI is a reality. Altman’s call for safety progress, alongside the concerns raised by researchers, emphasizes the importance of responsible Artificial Intelligence development.

As we reflect on GPT-4’s ability to do science and Altman’s statement on GPT-5, we’re reminded that the world of AI is evolving at an unprecedented pace. It’s a world where language models like GPT-4 are no longer just tools for generating text but are becoming contributors to scientific research, capable of reasoning and problem-solving.

One example of GPT-4’s scientific prowess is its ability to design experiments autonomously. GPT-4 can propose hypotheses, develop experimental plans, and interpret results, all through its understanding of language and interaction with digital tools. This fascinating ability to engage in scientific inquiry marks a turning point in the potential applications of AI.

As we look to the future, the upcoming GPT-5 sparks curiosity and wonder.

- Will GPT-5 take us even closer to AGI?

- Will it unlock new emergent abilities that redefine the boundaries of AI?

These questions are at the forefront of Artificial Intelligence research, and the answers will shape the direction of its development for years to come.

However, alongside the excitement and optimism, there’s a sense of caution. As Altman’s statement highlighted, the path to AGI must prioritize safety and alignment with human values. The idea of AGI as “Godlike AI” underscores the potential consequences of creating an Artificially Intelligent system capable of infinite self-improvement. Ethical considerations, transparency, and public discourse are all essential elements in guiding Artificial Intelligence research responsibly.

While GPT-4’s achievements in science are impressive, we must also consider potential risks. For instance, if GPT-4 can propose non-toxic molecules, the same capability could potentially be turned toward proposing and synthesizing harmful substances. Guardrails and ethical guidelines must be put in place to prevent misuse and ensure the technology benefits humanity.

This discussion about the AI Dilemma was recorded on March 9, 2023. It raises great points about how catastrophic AI capabilities can be and points out the need for adequate safety measures.

https://www.youtube.com/watch?v=xoVJKj8lcNQ

The video above is from the Center for Humane Technology, and all rights belong to their respective owners.

The discussion about GPT-4 and GPT-5 is not just about technical advancements; it’s also about envisioning a safe future and deciding how we want to create it. It’s about recognizing the power of AI to transform society and acknowledging the responsibility that comes with it.

In conclusion, the journey of GPT-4’s venture into science and Altman’s statement on GPT-5 offer a glimpse into the ever-evolving world of Artificial Intelligence. They highlight the incredible potential of AI to contribute to scientific discovery, as well as the challenges we face in ensuring safe and ethical Artificial Intelligence development. As we forge ahead, we must embrace both the possibilities and the responsibilities that come with advancing AI.

Ultimately, the goal is to harness the power of Artificial Intelligence for the betterment of humanity, while navigating the complexities of a future where Artificial Intelligence has an increasingly influential role in our lives.

Note: The views and opinions expressed by the author, or any people mentioned in this article, are for informational purposes only, and they do not constitute financial, investment, or other advice.

Relevant Articles:

Bill Gates on GPT-4: How GPT will Likely Affect The Future

GPT-4’s Sparks of AGI: AutoGPT & MemoryGPT are Game Changers

Hugging Face: The Emoji That Sparked a ML Revolution