As someone keeping a keen eye on the world of artificial intelligence (AI), you’ve likely seen a lot of talk about size. GPT-3, GPT-4, and similar AI models, with their mammoth parameter counts, have been dominating the stage.

And it’s not hard to see why: these models have an almost unbelievable breadth of knowledge and capability. But what if I told you there’s a new kid on the block who’s challenging these giants, despite being more than a hundred times smaller than GPT-3?

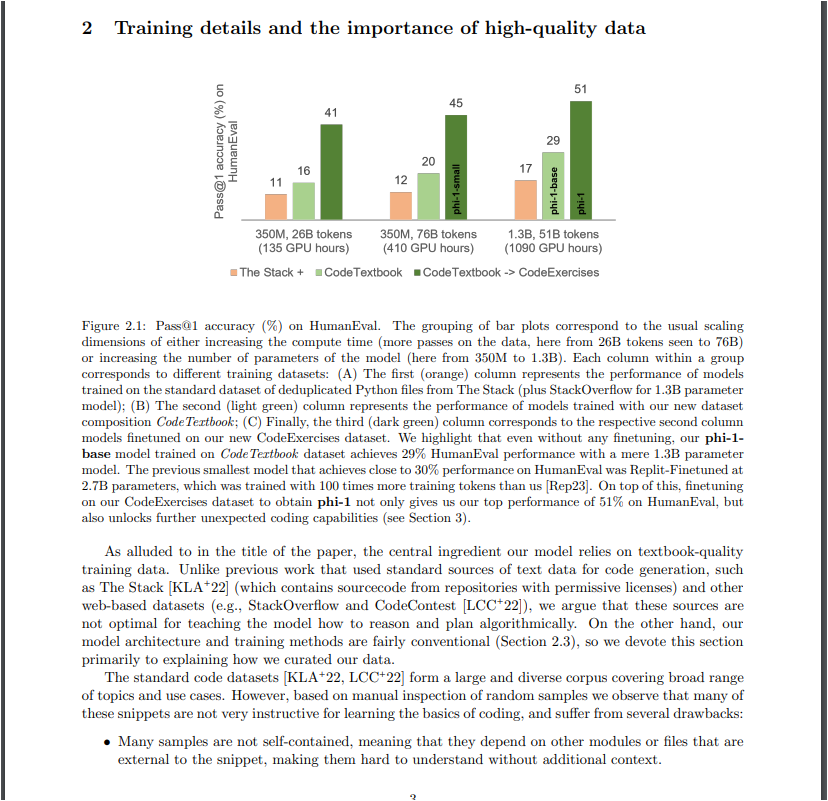

Meet Phi-1, a model that’s been described as a ‘textbook’ example of the saying, ‘size isn’t everything.’ With a mere 1.3 billion parameters, this miniature model is prompting us to rethink what we know about these powerful tools. It’s small, it’s smart, and it’s portable: at this size, the model’s weights could plausibly fit on your smartphone!
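To put the smartphone claim in perspective, here is a rough back-of-the-envelope sketch of how much storage 1.3 billion parameters need at different numeric precisions. The helper `model_size_gb` and the list of precisions are illustrative assumptions, not details from the Phi-1 team, and the figures cover the weights only (no activations or runtime overhead).

```python
def model_size_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate storage for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


PHI1_PARAMS = 1.3e9  # parameter count quoted in this article

# Bytes per parameter for some common storage formats (assumed for illustration).
FOOTPRINTS = {
    "float32": model_size_gb(PHI1_PARAMS, 4),
    "float16": model_size_gb(PHI1_PARAMS, 2),
    "int8": model_size_gb(PHI1_PARAMS, 1),
    "int4": model_size_gb(PHI1_PARAMS, 0.5),
}

for name, gb in FOOTPRINTS.items():
    print(f"{name}: {gb:.2f} GB")
```

At 16-bit precision that works out to roughly 2.6 GB of weights, and about a quarter of that with 4-bit quantization, which is within the storage of a typical modern phone. Whether it runs at a useful speed on a given device is a separate question.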

A Pocket-Sized Revolution

Imagine carrying around a coding expert in your pocket, available 24/7 to help you crack that tough Python problem. Sounds like science fiction, right? Well, thanks to Phi-1, this fantasy is fast becoming a reality. This model’s creators put a particular focus on Python programming tasks, making it a powerful, on-the-go coding assistant. But how can something so small hold so much power?

Quality Over Quantity

The magic lies in the quality and diversity of the data that Phi-1 was trained on. Rather than scraping everything available, the Phi-1 team filtered code from sources such as The Stack and Stack Overflow for its educational value, then used GPT-3.5 to synthesize Python textbook material along with exercises and solutions. It’s like the old story of David and Goliath: Phi-1 is showing us that a well-aimed stone (or, in this case, a carefully curated dataset) can make all the difference.
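The idea of curating for quality can be illustrated with a toy filter. This is only a sketch: the `Sample` class, the `educational_score` heuristic, and the 0.5 threshold are all hypothetical and are not the Phi-1 team's actual method. It simply shows the general shape of "score each sample, keep the instructive ones."

```python
from dataclasses import dataclass


@dataclass
class Sample:
    source: str  # where the snippet came from (hypothetical label)
    code: str    # the Python snippet itself


def educational_score(code: str) -> float:
    """Toy heuristic in [0, 1] that rewards functions, docstrings, and comments."""
    lines = [ln.strip() for ln in code.splitlines() if ln.strip()]
    if not lines:
        return 0.0
    has_function = any(ln.startswith("def ") for ln in lines)
    has_docstring = '"""' in code or "'''" in code
    comment_ratio = sum(ln.startswith("#") for ln in lines) / len(lines)
    # Weights are arbitrary illustrative choices, not tuned values.
    return 0.4 * has_function + 0.3 * has_docstring + 0.3 * min(1.0, comment_ratio * 4)


def filter_samples(samples, threshold=0.5):
    """Keep only the samples whose score meets the (assumed) threshold."""
    return [s for s in samples if educational_score(s.code) >= threshold]


samples = [
    Sample("forum", "x=1\ny=2\nprint(x+y)"),
    Sample(
        "textbook",
        'def add(a, b):\n'
        '    """Return the sum of a and b."""\n'
        '    # Addition is commutative.\n'
        '    return a + b',
    ),
]

kept = filter_samples(samples)
print([s.source for s in kept])
```

A real pipeline would replace the hand-written heuristic with a learned quality classifier, but the filtering step itself looks much the same.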

The implications of Phi-1’s success are vast, especially when we consider the timeline to AGI (Artificial General Intelligence). Until now, we’ve thought that bigger models meant faster progress. But Phi-1 suggests that the journey to AGI might be less about size and more about the nuance of the data used for training.

Specialization: The Way Forward?

Phi-1’s specialty in Python programming is a testament to the potential of smaller models to excel at specific tasks. Imagine a world with a variety of such specialized models, each highly capable in its area: one AI model for diagnosing diseases, another for predicting weather patterns. The possibilities are endless. However, it’s crucial to remember that these models, like Phi-1, may be less robust than larger ones when the input varies in style or contains errors.

A New Chapter in AI Development

Phi-1’s story is only beginning, and it’s already shaking up our understanding of AI development. It serves as a potent reminder that bigger isn’t always better. By focusing on data quality and clever scaling techniques, we can expect to see a new breed of small, specialized, and highly capable AI models.

As we edge closer to the open-source availability of Phi-1, its potential to influence our progress towards human-level intelligence becomes increasingly tangible. This model is a glimpse into the future, showing us that we can improve AI performance without simply increasing size.

The wider discussion around Phi-1 touches on several aspects of the current state and potential future of AI development. Here are the key takeaways:

- Training AI with Quality Data: High-quality training data matters. It can be acquired from sources like Stack Overflow or generated synthetically. The educational value of the data matters too; an AI model might perform better if it’s trained on data selected as educational for a specific purpose, such as learning to code or learning French.

- Application of Synthetic Training: Synthetic training data could be applied in many fields beyond coding. For example, models trained this way might be used to predict stock market trends or improve language learning.

- AI Capabilities and Limitations: Despite the advancements, current AI models have limitations. For instance, they often lack domain-specific knowledge or struggle with variations in language and style. However, there’s optimism that fine-tuning these models can yield substantial improvements, even in areas not directly targeted during training.

- Future of AI Development: AI progress may come to be driven less by increased compute power (Moore’s Law) and more by the quality of task-specific datasets. This could lead to a multitude of specialized AI models and a shift away from the pursuit of ever-bigger general models.

- Safety and Ethical Considerations: There are concerns about potential misuse of AI, particularly in biological fields where it might enable the creation of dangerous pathogens. Safety and ethics need to be a significant consideration as AI continues to advance.

- AI Timelines: The timeframe for achieving transformative AI capabilities remains speculative. One suggestion is that within five to ten years, we may see whether advances in data, algorithms, and compute power will lead to artificial general intelligence (AGI) or superintelligence.

- Economic Implications of AI: If an AI model can generate substantial returns, there’s a huge incentive to invest in its development and the infrastructure to support it.

Together, these points reflect an ongoing conversation about the progress, potential, and risks of AI development. It’s a complex and rapidly evolving field with significant implications for society, the economy, and global security.

Conclusion

In summary, the creation and success of the Phi-1 model open up many new questions and possibilities in the realm of artificial intelligence and machine learning. It’s a significant step that tells us a lot about where we might be heading and offers important insights about the dynamics of quality and quantity in data, the potential of smaller, specialized models, and the uncertain timeline towards AGI.

Phi-1 is undoubtedly a game-changer in the AI world. This compact yet powerful model is not just a testament to the progress we’ve made but also a beacon guiding us towards a future filled with innovative, specialized, and efficient AI models. As we stand on the cusp of this exciting new era, it’s worth taking a moment to appreciate the transformative power of quality data and creative scaling. As Phi-1 demonstrates, size isn’t everything – sometimes, it’s the little things that make the biggest difference.

